This blog post introduces a new coreference resolution component for spaCy.

To understand the task of coreference, consider a fictional story populated by a cast of characters. Throughout the story, these characters appear in different locations, face a variety of situations and encounter one another, and the narrator refers to these characters, places and events in all sorts of ways. Similarly, authors of news articles, restaurant reviews or scientific papers refer to buildings, festivals, drug treatments and much more. Our everyday conversations with colleagues, friends and family also consist of mentioning things and then referring back to them in a different way later on.

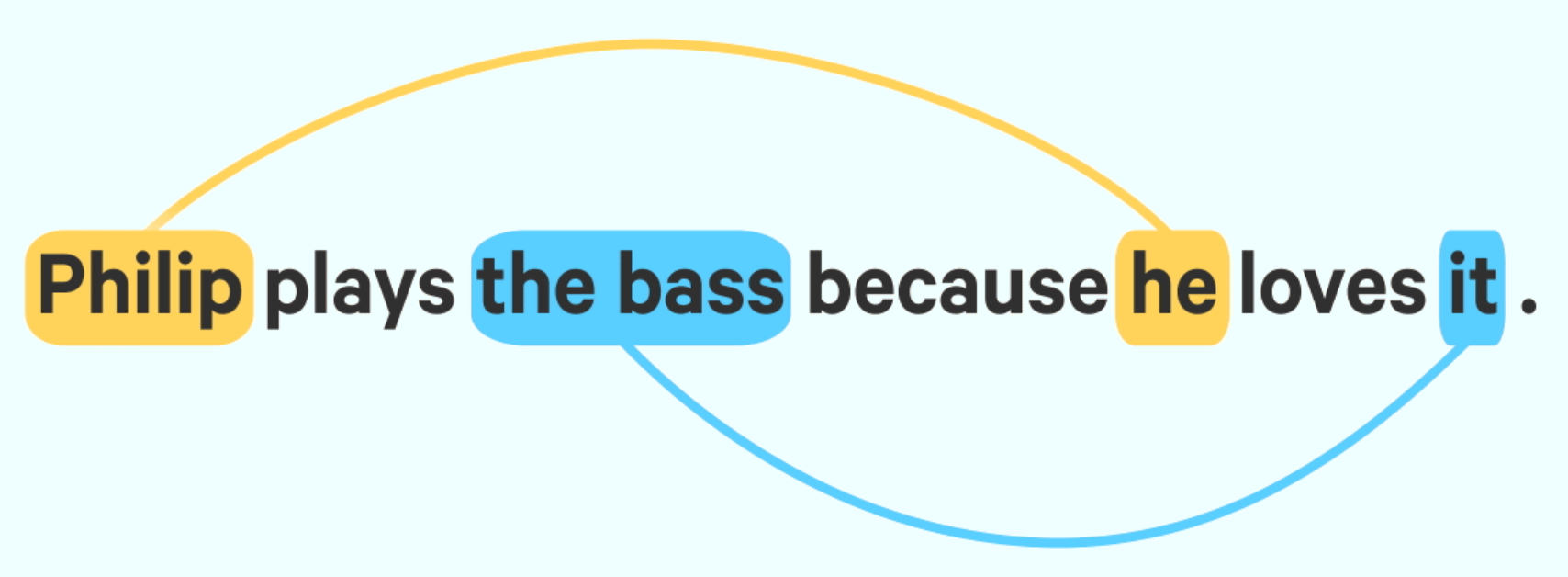

When an entity or an event has already been introduced in the discourse and we mention it later, that’s called coreference. In other words, when two expressions refer to the same thing, we say that they corefer.
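One way to picture the output of coreference resolution is as a set of clusters, where each cluster groups the mentions (token spans) that refer to the same entity. The sketch below is purely illustrative: the sentence, the `(start, end)` mention offsets and the helper names `mention_text` and `corefer` are hypothetical, and this is not the output format of any particular spaCy component.

```python
from typing import List, Tuple

# Hypothetical representation: a mention is a (start, end) token span, end exclusive.
Mention = Tuple[int, int]

tokens = ["Sarah", "picked", "up", "the", "book", "because",
          "she", "wanted", "to", "read", "it", "."]

# Each cluster groups the mentions that refer to the same entity.
clusters: List[List[Mention]] = [
    [(0, 1), (6, 7)],    # "Sarah" ... "she"
    [(3, 5), (10, 11)],  # "the book" ... "it"
]


def mention_text(mention: Mention) -> str:
    """Return the surface text of a mention."""
    start, end = mention
    return " ".join(tokens[start:end])


def corefer(a: Mention, b: Mention) -> bool:
    """Two mentions corefer if they belong to the same cluster."""
    return any(a in cluster and b in cluster for cluster in clusters)


for cluster in clusters:
    print([mention_text(m) for m in cluster])
# ['Sarah', 'she']
# ['the book', 'it']
```

Here "she" corefers with "Sarah" and "it" with "the book", while "Sarah" and "it" do not corefer.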

The coreference resolution system we're releasing in spacy-experimental v0.6.0 is an end-to-end neural system that can be applied to a wide variety of entity coreference problems. Our implementation is based on a recent take on the neural approach, described in the paper “Word-Level Coreference Resolution” by Vladimir Dobrovolskii, published at EMNLP 2021. We've also released a transformer-based English coreference pipeline trained on OntoNotes, which we currently call en_coreference_web_trf. You can find it here, and example training code is available here.
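The central idea of the word-level approach is to predict coreference links between individual words rather than between full spans, and to recover the spans afterwards. The toy sketch below only illustrates the linking step under simplifying assumptions: the antecedent scores are hand-written numbers rather than model outputs, a fixed threshold of `0.0` stands in for the "no antecedent" option, the function names `pick_antecedents` and `build_clusters` are hypothetical, and the step that expands each word back to its full span is omitted. It is not the spaCy implementation.

```python
from typing import Dict, List, Optional


def pick_antecedents(scores: List[List[float]],
                     threshold: float = 0.0) -> List[Optional[int]]:
    """For each word i, pick the highest-scoring earlier word j < i.

    If no score beats the threshold (standing in for a 'no antecedent'
    option), the word gets None: it starts a new entity or is not a mention.
    """
    antecedents: List[Optional[int]] = []
    for i, row in enumerate(scores):
        best_j, best_score = None, threshold
        for j in range(i):
            if row[j] > best_score:
                best_j, best_score = j, row[j]
        antecedents.append(best_j)
    return antecedents


def build_clusters(antecedents: List[Optional[int]]) -> List[List[int]]:
    """Follow antecedent links back to a root word and group words by root."""
    root: Dict[int, int] = {}
    for i, j in enumerate(antecedents):
        # j < i always holds, so root[j] has already been computed.
        root[i] = i if j is None else root[j]
    groups: Dict[int, List[int]] = {}
    for i, r in root.items():
        groups.setdefault(r, []).append(i)
    # Singleton groups contain no coreference link, so drop them.
    return [g for g in groups.values() if len(g) > 1]


# Toy input: one "head" word per mention, with hand-written pairwise scores.
words = ["Sarah", "bought", "book", "she", "read", "it"]
n = len(words)
scores = [[-1.0] * n for _ in range(n)]
scores[3][0] = 2.0  # "she" -> "Sarah"
scores[5][2] = 1.5  # "it"  -> "book"

antecedents = pick_antecedents(scores)
clusters = build_clusters(antecedents)
print([[words[i] for i in c] for c in clusters])
# [['Sarah', 'she'], ['book', 'it']]
```

Linking single words keeps the number of candidate pairs quadratic in the number of words rather than in the much larger number of candidate spans, which is the efficiency argument behind working at the word level.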

→ Authors: Ákos Kádár, Paul O’Leary McCann, Richard Hudson, Edward Schmuhl, Sofie Van Landeghem, Adriane Boyd, Madeesh Kannan, Victoria Slocum

→ Blog post: Full post

→ Original paper by Dobrovolskii: EMNLP 2021